Retrieval-augmented generation (RAG) chatbots fuse large language models (LLMs) with your actual data, so answers are both intelligent and grounded. Instead of guessing, they retrieve real context (docs, logs, FAQs) and generate responses backed by facts. The result: fewer hallucinations, faster iteration, and AI that behaves less like an experiment and more like a product.

However, many RAG chatbots fail in the real world because of high latency and irrelevant answers, leaving teams stuck with black-box results they can’t trace or trust.

Technical teams are rethinking how they build. Instead of duct-taping APIs and pipelines, teams can use Dataiku, The Universal AI Platform™, to design RAG chatbots that are modular, traceable, and ready for real-world use.

The Problem: Many RAG Chatbots Don't Scale

The typical DIY RAG chatbot starts simple: a vector database, a few documents, maybe LangChain. It works, until:

- Multiple knowledge banks are needed.

- Complex routing is required.

- Teams need auditability or access control.

- A hallucination or data leak triggers an internal review.

When that happens, teams either rewrite from scratch or pause GenAI deployment entirely. Neither scales.

The Fix: Modular, Governed RAG Chatbots in Dataiku

In Dataiku, you can build a RAG chatbot that combines the precision of search with the creativity of GenAI. Instead of hallucinating answers, it grounds every response in real, verified data pulled from connected sources (your docs, databases, or APIs) before generating output. The result is faster, smarter, and more reliable insights that scale across teams without compromising trust or control.

Dataiku lets teams build production-grade RAG chatbots using an agentic architecture, connected through Agent Hub and powered by the Dataiku LLM Mesh.

Here are four core benefits this approach unlocks:

Modular Workflows, Maximum Velocity

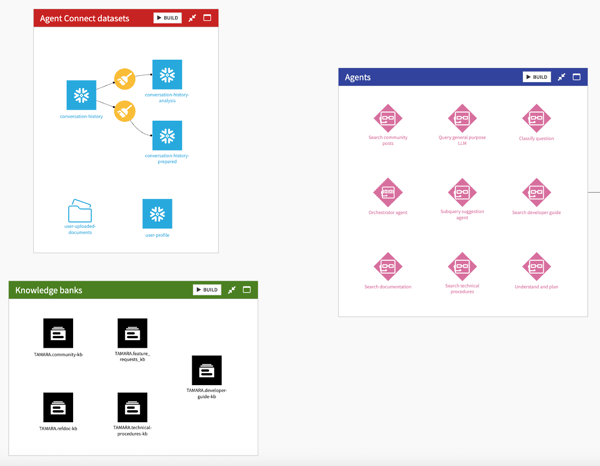

Data prep, knowledge banks, and agents live in separate Dataiku projects, giving each team access only to what they need. This modular setup makes debugging and updates faster, reduces the risk of cross-component errors, and allows parallel development across data engineers, analysts, and AI specialists. Teams move faster, collaborate smarter, and maintain control without unnecessary complexity.

Smarter Chunking = Better Context

Document ingestion adapts to the type of content. REST API references, tutorials, patents, emails, or meeting notes can each be chunked differently: structure is preserved where it matters, while loosely structured content is broken down into small “child chunks” for faster, more precise search, each linked to a parent chunk that keeps the broader context. This results in more accurate, relevant AI responses and fewer hallucinations.
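To make the parent/child idea concrete, here is a minimal Python sketch. It is not Dataiku's implementation: it assumes whitespace-separated words as a stand-in for tokens, fixed window sizes, and hypothetical names (`Chunk`, `parent_child_chunks`, `expand_to_parents`). Small child chunks are what a search index would match against; each keeps a `parent_id`, so matched hits can be swapped for their larger parent chunk before being passed to the LLM.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Chunk:
    chunk_id: str
    text: str
    parent_id: Optional[str] = None  # set only on child chunks


def split_words(text: str, size: int) -> List[str]:
    """Split text into consecutive windows of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def parent_child_chunks(doc_id: str, text: str, parent_size: int = 200,
                        child_size: int = 40) -> Tuple[List[Chunk], List[Chunk]]:
    """Split a document into large parent chunks, then split each parent into
    small child chunks that point back to their parent."""
    parents: List[Chunk] = []
    children: List[Chunk] = []
    for p_idx, parent_text in enumerate(split_words(text, parent_size)):
        parent = Chunk(f"{doc_id}-p{p_idx}", parent_text)
        parents.append(parent)
        for c_idx, child_text in enumerate(split_words(parent_text, child_size)):
            children.append(
                Chunk(f"{parent.chunk_id}-c{c_idx}", child_text, parent.chunk_id)
            )
    return parents, children


def expand_to_parents(hits: List[Chunk], parents: List[Chunk]) -> List[Chunk]:
    """Replace matched child chunks with their parent chunks, deduplicated and
    kept in the order the hits were ranked."""
    by_id = {p.chunk_id: p for p in parents}
    seen = set()
    context: List[Chunk] = []
    for hit in hits:
        if hit.parent_id is not None and hit.parent_id not in seen:
            seen.add(hit.parent_id)
            context.append(by_id[hit.parent_id])
    return context
```

Real pipelines would more likely split on document structure (headings, sections, email threads) than on fixed word counts; the fixed windows here only keep the example short.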

LLM Guardrails Are Built In

PII detection, prompt filtering, and token cost estimation are baked into Dataiku. Teams can experiment freely and deploy with confidence, knowing sensitive data stays protected and AI behavior is traceable. This reduces compliance overhead, keeps innovation safe, and accelerates AI adoption across the organization.
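As a rough illustration of what these three guardrails do before a prompt reaches a model, the sketch below uses regex-based PII redaction, a phrase blocklist, and a character-based token estimate. All names, patterns, and prices are hypothetical assumptions, not Dataiku's built-in guardrails; in particular, the "about 4 characters per token" rule is only a heuristic for English text, and production PII detection is usually far broader and often model-based.

```python
import re
from typing import List, Tuple

# Illustrative patterns only: production PII detection needs much broader
# coverage (names, addresses, national IDs) and is usually model-based.
PII_PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}

# Hypothetical blocklist standing in for a real prompt filter.
BLOCKED_PHRASES = ("ignore previous instructions",)


def redact_pii(text: str) -> Tuple[str, List[str]]:
    """Replace matched PII with a [LABEL] placeholder; return the labels found."""
    found = []
    for label, pattern in PII_PATTERNS.items():
        if pattern.search(text):
            found.append(label)
            text = pattern.sub(f"[{label}]", text)
    return text, found


def is_blocked(prompt: str) -> bool:
    """Naive prompt filter: reject prompts containing a blocked phrase."""
    lowered = prompt.lower()
    return any(phrase in lowered for phrase in BLOCKED_PHRASES)


def estimate_cost_usd(prompt: str, expected_output_tokens: int,
                      usd_per_1k_input: float, usd_per_1k_output: float) -> float:
    """Rough pre-call cost estimate, assuming ~4 characters per input token."""
    input_tokens = max(1, len(prompt) // 4)
    return (input_tokens * usd_per_1k_input
            + expected_output_tokens * usd_per_1k_output) / 1000
```

For example, `redact_pii("Contact jane.doe@example.com or +1 555 123 4567")` returns the masked string `"Contact [EMAIL] or [PHONE]"` together with the labels `["EMAIL", "PHONE"]`, which could be logged for traceability.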

Visual or Code-Based Agents

Prototype with low-code visual agents, then scale to code-based agents when workflows demand advanced logic, complex API calls, or memory chaining. In Dataiku, teams work on one platform, with different ways of building and seamless handoffs between them. This flexibility empowers all skill levels, keeps teams aligned, and reduces redevelopment, making the path from prototype to production smooth and efficient.

Architecture in Action: How Teams Build in Dataiku

Most production-ready RAG chatbots built in Dataiku follow a familiar pattern.

It all begins with curated Knowledge Banks (KBs). Document-level security can be enforced in each KB, and data teams can use automated workflows to clean, chunk, and enrich data, ensuring each KB is accurate, governed, and ready for retrieval.

From there, dedicated KB retrieval agents are created and linked to each KB. These agents are responsible for searching and synthesizing responses from their assigned domain, serving users such as developers, admins, or support staff with contextual answers and source citations.

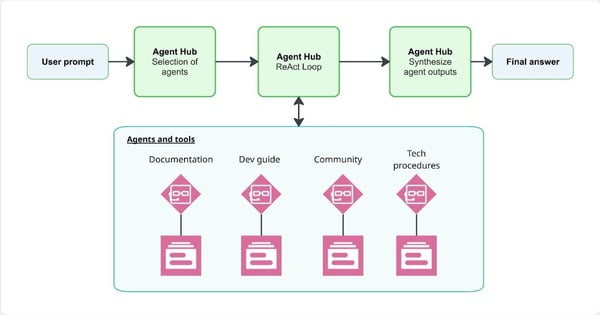

And behind the scenes, Agent Hub is what binds it all together. This feature enables the seamless orchestration of multiple agents, whether they reside in the same project or across different ones. It offers a unified interface for routing, managing, and scaling agent workflows, while maintaining modularity and access control across teams.

The KB retrieval agents exposed in Agent Hub are coordinated by an orchestrator. Its system prompt can be customized so that it analyzes each user query to determine its intent, complexity, and target audience. Based on this analysis, the orchestrator selects the most appropriate KB retrieval agent to handle the response. If needed, it can also reformulate the query to better match indexed content, or fall back to a general-purpose LLM when no strong match is found.
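The routing-with-fallback pattern can be sketched in a few lines of Python. This is a simplified stand-in, not how Agent Hub works: in the setup described above, an LLM guided by a system prompt does the intent analysis, whereas this sketch swaps in a plain keyword-overlap score. The agent names, keyword sets, threshold, and the `"general_llm"` fallback label are all hypothetical.

```python
import re
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class KBAgent:
    name: str
    keywords: Set[str] = field(default_factory=set)  # hypothetical domain vocabulary


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and keep alphanumeric runs as tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def match_score(query: str, agent: KBAgent) -> float:
    """Fraction of the agent's keywords that appear in the query."""
    if not agent.keywords:
        return 0.0
    return len(tokenize(query) & agent.keywords) / len(agent.keywords)


def route(query: str, agents: List[KBAgent], threshold: float = 0.2) -> str:
    """Pick the best-matching KB agent, or fall back to a general-purpose LLM
    when no agent clears the threshold."""
    best = max(agents, key=lambda agent: match_score(query, agent))
    if match_score(query, best) < threshold:
        return "general_llm"  # hypothetical fallback target
    return best.name


agents = [
    KBAgent("developer_docs", {"api", "sdk", "endpoint", "python"}),
    KBAgent("admin_guide", {"user", "permission", "license", "install"}),
]
print(route("How do I call the API endpoint from Python?", agents))  # developer_docs
print(route("What's the weather today?", agents))  # general_llm
```

The explicit threshold is the design choice worth noting. Without it, the router would always send queries to some KB agent, even when none of them is relevant.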

One way to increase answer relevance is to instruct the system, through its system prompt, to suggest follow-up subqueries that reframe the question and clarify the user’s intent.

The clarified query is then passed to the head LLM, which “understands and plans”: it assesses the agents at its disposal, calls them, and reasons over their outputs until it has an adequate answer for the user. Users can trust these answers because they are sourced, and users can see the exact documents used to craft each one.

Finally, teams close the loop. User feedback is collected early and often to build a ground truth dataset, enabling continuous evaluation of agent accuracy and performance. The result? RAG chatbots that don’t just respond, but respond with traceability, confidence, and context.

From Launch to Longevity: Train, Evaluate, Iterate

Building a RAG chatbot is only the beginning. The real measure of success comes from what happens after the system is launched. Standing up the technical architecture is relatively straightforward, but sustaining a reliable, trustworthy, and continuously improving agent requires intentional investment in user education, consistent evaluation, and ongoing iteration.

Once the initial excitement fades, organizations often realize that long-term performance depends on how people use the agent, how effectively feedback is captured, and how quickly the system adapts to real-world usage. Below are three foundational disciplines for sustaining and improving a RAG chatbot after launch:

1. Educate Users on Best Practices

A well-trained agent is only as strong as the habits of its users. Many performance issues stem not from model limitations but from how people prompt the agent.

Encourage open-ended, exploratory questions (“What are the steps for creating…?”) over binary prompts (“Is it A or B?”). Once users understand that an LLM may confidently pick one option even when neither is correct, they naturally learn to design better prompts and verify responses.

In Dataiku, this education can be built right into the experience. Teams can embed quick tips or contextual guidance in the Dataiku Answers interface, turning every query into a micro-training opportunity. Over time, this helps democratize AI literacy across the organization, empowering users to interact with agents critically, not blindly. To build users’ confidence in their prompts, Dataiku lets them:

- Access a library of “ready to use” prompts to jumpstart projects.

- Get help from a built-in “prompt assistant” designed to rephrase prompts for effectiveness.

- Review example prompts and outputs side-by-side to understand what “good” looks like, and iterate with clarity.

This way, the user learns how to build robust prompts using reliable tools and resources. The smartest agents come from teams that treat prompting as a skill, not a guessing game.

2. Collect Feedback Early

In the early days of deployment, feedback is your gold mine. Every misfire, false positive, or off-topic response is a data point waiting to make the agent smarter.

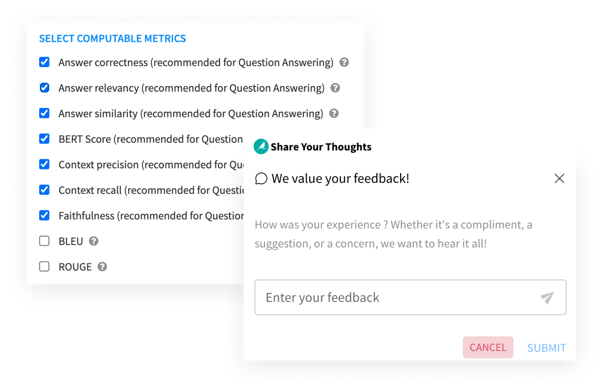

With Dataiku, teams can activate built-in feedback capture in the Dataiku Answers interface, allowing users to upvote or flag results in real time. These qualitative reactions are translated into measurable signals (accuracy scores, latency trends, or retrieval quality metrics) that feed directly into evaluation pipelines.

The result? Teams can identify systemic issues early (like misrouted queries or low-confidence answers) and iterate before small flaws grow into major blockers. Continuous feedback transforms “chatbot drift” into “chatbot evolution.” Don’t wait for complaints: build feedback loops from day one and make improvement part of the system’s DNA.

3. Benchmark Performance Over Time

Once feedback data starts flowing, it becomes your benchmark. Every positively rated answer helps form a reliable “ground truth” dataset, the backbone of continuous evaluation.

This allows teams to compare architectures, retrievers, chunking strategies, or agent routing logic side by side. Over time, you can visualize how updates to chunking rules or retrieval depth actually impact precision and recall.
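A minimal sketch of that comparison in Python is shown below. It assumes a hypothetical feedback record with `question`, `cited_docs`, and `rating` fields, and it treats the documents cited by positively rated answers as the "relevant" set for each question. That is a convenient proxy, not a perfect label. A retriever configuration is modeled as any function from a question to a list of document IDs, so different chunking rules or retrieval depths can be scored on the same ground truth.

```python
from typing import Any, Callable, Dict, List, Set, Tuple

Feedback = Dict[str, Any]  # hypothetical record: question, cited_docs, rating


def build_ground_truth(feedback: List[Feedback]) -> Dict[str, Set[str]]:
    """Positively rated answers become reference data: their cited documents
    are treated as the relevant documents for that question."""
    return {
        str(f["question"]): set(f["cited_docs"])
        for f in feedback
        if f["rating"] == "up"
    }


def precision_recall(retrieved: List[str], relevant: Set[str]) -> Tuple[float, float]:
    """Precision and recall of one retrieval result against the relevant set."""
    retrieved_set = set(retrieved)
    if not retrieved_set or not relevant:
        return 0.0, 0.0
    hits = len(retrieved_set & relevant)
    return hits / len(retrieved_set), hits / len(relevant)


def evaluate(retriever: Callable[[str], List[str]],
             ground_truth: Dict[str, Set[str]]) -> Tuple[float, float]:
    """Mean precision and recall of a retriever configuration over the ground truth."""
    if not ground_truth:
        return 0.0, 0.0
    scores = [precision_recall(retriever(q), rel) for q, rel in ground_truth.items()]
    n = len(scores)
    return sum(p for p, _ in scores) / n, sum(r for _, r in scores) / n
```

Calling `evaluate` once per configuration (for example, one retriever built with small chunks and one with large chunks) yields directly comparable precision and recall figures that can be tracked after each update.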

Negative-rated answers are equally valuable. By clustering and reviewing them, teams uncover systemic blind spots: missing content in the knowledge bank, unclear context windows, or misclassified intents. Each insight can directly inform design changes in your RAG setup, from refining prompt templates to rethinking agent orchestration.
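Proper clustering would typically group the text of failed questions, for example by embedding similarity. As a simpler stand-in, the Python sketch below groups negative feedback by the agent that handled it and flags thumbs-down answers that cited no documents at all, which can hint at missing knowledge bank content. The record fields (`agent`, `cited_docs`, `rating`, `question`) are hypothetical assumptions, not a Dataiku schema.

```python
from collections import Counter
from typing import Any, Dict, List, Tuple


def triage_negative(feedback: List[Dict[str, Any]]) -> Tuple[Counter, List[str]]:
    """Count thumbs-down answers per handling agent, and list questions whose
    negatively rated answers cited no documents (possible KB content gaps)."""
    by_agent: Counter = Counter()
    no_context: List[str] = []
    for f in feedback:
        if f["rating"] != "down":
            continue
        by_agent[f["agent"]] += 1
        if not f["cited_docs"]:
            no_context.append(f["question"])
    return by_agent, no_context
```

`by_agent.most_common()` then surfaces the agents with the most failures first, which is a natural starting point for reviewing routing logic or prompt templates.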

With this discipline, RAG chatbots stop being static utilities and become adaptive systems, ones that learn, self-correct, and scale responsibly. Benchmarking isn’t about scorekeeping; it’s about building a feedback flywheel that compounds accuracy over time.

RAG-Powered Use Cases in Production

RAG has become a core capability in mission-critical workflows, giving employees fast, reliable access to the information they need. Dataiku customers are deploying these RAG chatbots in production to streamline daily operations.

Health and Safety Support App

LG Chem built a RAG‑powered web app to serve as the first line of support for employees looking for health and safety documentation. By automating answers to common queries, they cut down on manual search time and freed subject-matter experts to focus on complex or high‑risk issues.

Internal Process Support Agent

Another enterprise in the fashion sector deployed a chatbot to give employees instant access to corporate policies, procurement guidelines, and store operations manuals. Rather than manually sifting through documents, people now get relevant passages and guidance immediately, boosting efficiency and reducing friction in everyday workflows.

These aren’t just proofs of concept. The shift is toward fully operational agents that go beyond retrieval: agents that reason, automate, and integrate tightly with internal systems. They leverage Dataiku features such as advanced data preparation (OCR, hierarchical chunking, document-aware segmentation), multi-agent orchestration with Agent Hub, governance tools to control data access, and monitoring to catch hallucinations or stale information.

In each case, the benefit is clear: time saved, accuracy improved, and knowledge made accessible automatically.